Not a month has passed since the celebration of Pandas Profiling as the top-tier open-source package for data profiling, and YData’s development team is already back with astonishing fresh news.

The most popular data profiling package in every data scientist’s toolbelt now also supports Spark DataFrames, confidently entering the Big Data landscape with a flashy new coat. Look out, ydata-profiling is on the radar.

This beloved data profiling package has been the go-to tool for a huge community of data scientists for quite some time: it serves them swiftly and efficiently by enabling a comprehensive understanding of their data, from summary statistics and visualization to the identification of inconsistencies and potentially critical data complexities (e.g., missing and imbalanced data).

These features have consistently been a stepping stone toward high-quality data for training and deploying models in real-world applications, and they are the reason the package has been so widely adopted across a growing number of organizations.

Yet, this growth naturally calls for another “must-have” in the package: scale.

In this new release, ydata-profiling introduces new opportunities for data profiling in terms of scalability and integration, opening up the community to other data professionals beyond data scientists and analysts – especially data engineers.

In the following sections, we will cover the story behind the new name as well as why and how to leverage Spark DataFrames to take your data profiling and quality initiatives to the next level.

New year, new face, more functionalities!

Although Pandas DataFrames are widely known among the data science community, they struggle with increasing volumes of data. Starting with Spark DataFrames, this new release opens the door to supporting other types of data structures in future development.

Hence, to highlight this new opportunity and detach from the idea that Pandas DataFrames are the only solution for data manipulation, the “pandas-profiling” package was renamed to “ydata-profiling”.

The name stands for “Why data profiling?”, which is a naive but important question to be answered within the Data-Centric AI paradigm: fully understanding the data intricacies of the domain at hand and rapidly diagnosing inconsistencies and complexities that need to be addressed prior to model development.

Spark opens the door for data profiling at scale, i.e., enabling the assessment of data quality for large datasets by efficiently supporting their processing, which was not possible up until this moment.

Spark Profiling: “We’ve been eagerly waiting for it!”

Support for Spark DataFrames has been the feature most requested by the community, especially by those responsible for maintaining larger and more complex data science projects and infrastructures: Data Engineers.

Whereas data scientists need quick-and-easy tools to iteratively explore datasets and look for unknown insights and trends in data, data engineers work on big data analytics efforts, which require them to process and analyze huge chunks of data, often in real time. That calls for scale!

Because Pandas DataFrames and in-memory data processing are not ideal for larger data workloads, data engineers have been resorting to workarounds: randomly subsampling their data and profiling only that subset with Pandas Profiling, while performing certain computations behind the scenes (e.g., calculating basic statistics such as maximum and minimum values).

Surely, this comes with a hidden cost: the returned subsample needs to be representative of the data, which is impossible to know beforehand when we simply don’t have a basic understanding of the data yet.
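The workaround described above can be sketched with plain pandas. This is an illustration on synthetic data, not code from the package: the column name `amount`, the sample size, and the single extreme value are assumptions chosen to make the risk visible, and the separate statistics are assumed to be computed on the full data.

```python
import pandas as pd

# Synthetic data (an assumption for illustration): 1,000 ordinary values
# plus one extreme value that a profile should surface.
full = pd.DataFrame({"amount": list(range(1_000)) + [1_000_000]})

# Step 1 of the workaround: keep only a random subset of rows for profiling.
sample = full.sample(n=100, random_state=0)

# Step 2: compute a few basic statistics separately on the full data.
full_stats = {"min": int(full["amount"].min()), "max": int(full["amount"].max())}

# The same statistics computed on the sample alone, for comparison.
sample_stats = {"min": int(sample["amount"].min()), "max": int(sample["amount"].max())}

print("full data:", full_stats)
print("sample   :", sample_stats)
```

With 100 rows drawn from 1,001, any single row, including the extreme one, has roughly a 10% chance of landing in the sample, so anything computed on the sample alone can easily miss it.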

This is precisely the advantage of ydata-profiling: a package that integrates seamlessly with different data structures – such as Pandas and Spark DataFrames – and allows both in-memory and at-scale data profiling in a single line of code.

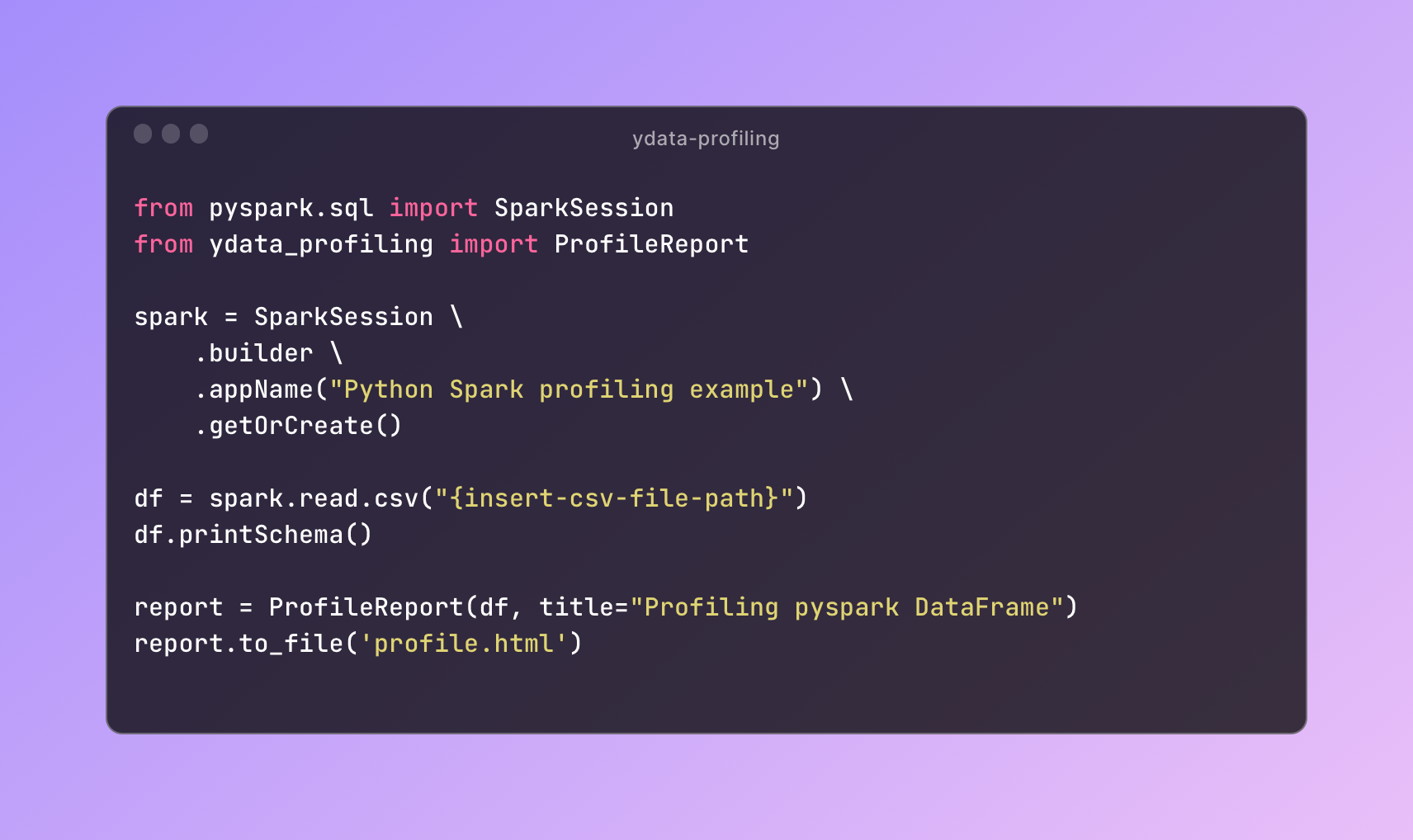

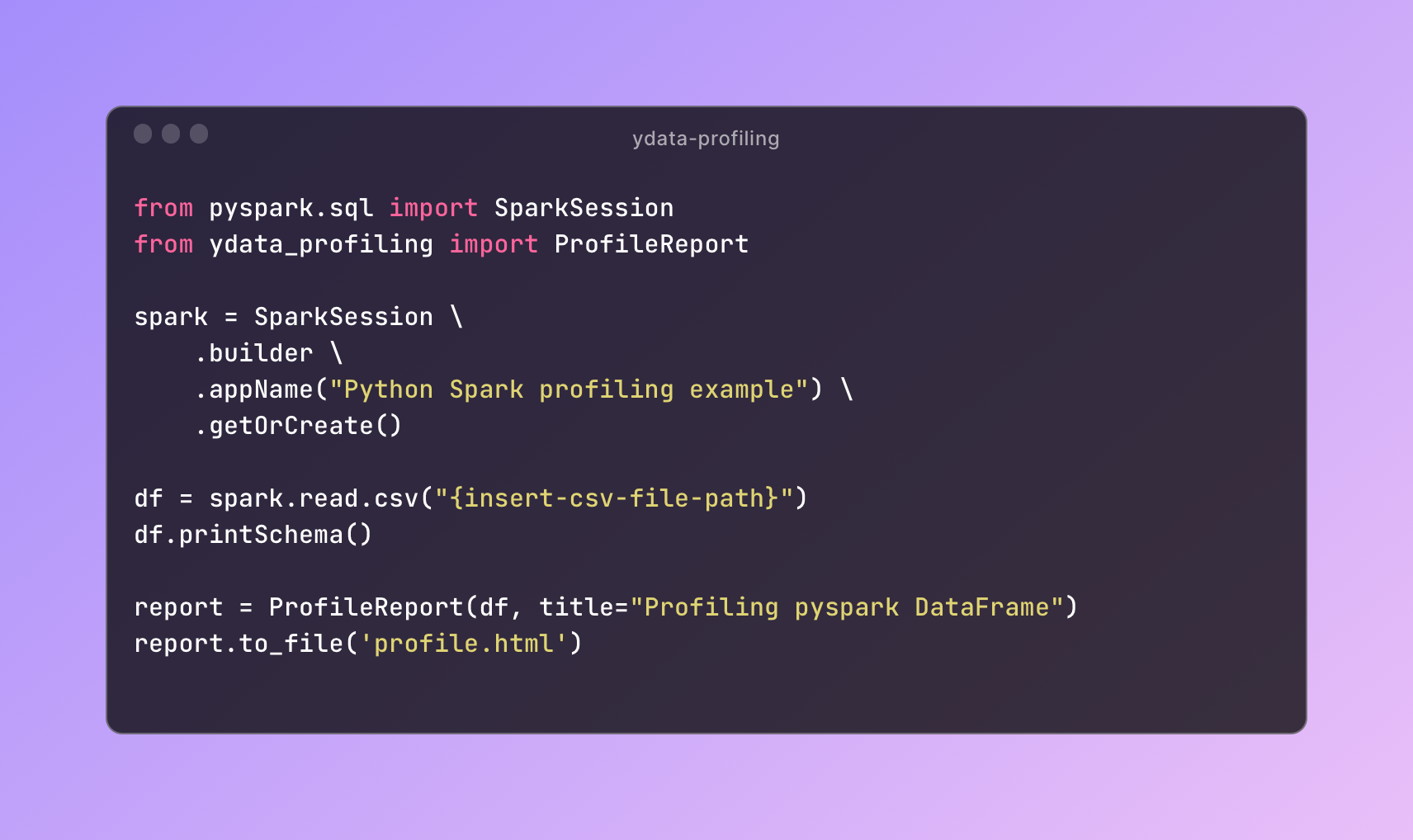

Here’s a quickstart example of how to profile data from a CSV using the PySpark engine and ydata-profiling:
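A minimal sketch of that quickstart, assuming `pyspark` and a Spark-enabled release of `ydata-profiling` are installed. The function name `profile_csv_with_spark`, the app name, and the file paths are placeholders for illustration rather than part of either library:

```python
def profile_csv_with_spark(csv_path: str, output_path: str = "spark_profile.html") -> None:
    """Read a CSV into a Spark DataFrame and write an HTML profiling report."""
    # Imported here so this module still loads on machines without Spark installed.
    from pyspark.sql import SparkSession
    from ydata_profiling import ProfileReport

    # Start (or reuse) a Spark session; the app name is arbitrary.
    spark = SparkSession.builder.appName("ydata-profiling-quickstart").getOrCreate()

    # header and inferSchema are optional reader settings; without inferSchema
    # every column is read as a string.
    df = spark.read.csv(csv_path, header=True, inferSchema=True)
    df.printSchema()

    # The same ProfileReport entry point that is used for Pandas DataFrames.
    report = ProfileReport(df, title="Spark DataFrame profile")
    report.to_file(output_path)
```

Calling `profile_csv_with_spark("path/to/data.csv")` would then write the report to `spark_profile.html` in the working directory.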

Transforming Big Data into Smart and Actionable Data with Profiling at Scale

With data being continuously produced nearly every millisecond of every day, organizations have access to millions of records to feed their increasingly large and complex data lakes and warehouses. With such a multitude of sources of data, adopting tools such as Spark to process large chunks of data made sense. Yet, it did not solve the issue of what data is being handled and why.

In other words, Spark adds scale, though not data understanding and interpretation – these are elements of data profiling.

ydata-profiling is the interpretability cherry on top of the scalability cake. With this new release, it empowers every team, from data scientists to data engineers, with the quintessential profiling tool that allows:

- Quick, visual support for validating Spark DataFrames;
- The diagnosis and assessment of both small and large volumes of data, helping organizations define better data management practices based on a unified understanding of the quality of their data assets;
- The development of continuous data profiling practices, with a tool that is easy to integrate into existing big data flows built on the Spark and Hadoop ecosystems, and that helps troubleshoot those flows by identifying business rules that were not met or by showing how data is being merged from distinct sources;
- And last but not least, an interchangeable and comparable report that aligns expectations and communication between the different data teams inside an organization: data engineers, data scientists, and data analysts.

With ydata-profiling, data teams can now operate with more advanced data flows, producing detailed snapshots of their data and rapidly adjusting development efforts as requirements and conditions change (e.g., data drifts, misalignment with business objectives, fairness constraints).
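One way to put two such snapshots side by side is the report comparison feature. The sketch below assumes two Pandas DataFrames with the same columns; whether comparison is also available for Spark DataFrames in a given release is worth checking in the package documentation. The function name `compare_snapshots` and the report titles are hypothetical.

```python
def compare_snapshots(before_df, after_df, output_path: str = "comparison.html") -> None:
    """Profile two Pandas DataFrames and write a side-by-side comparison report."""
    # Imported here so this module still loads where ydata-profiling is absent.
    from ydata_profiling import ProfileReport

    # One report per snapshot; the titles label each side of the comparison.
    before_report = ProfileReport(before_df, title="Before")
    after_report = ProfileReport(after_df, title="After")

    # compare() returns a new report that shows both snapshots together.
    comparison = before_report.compare(after_report)
    comparison.to_file(output_path)
```

A typical use would be comparing last month's extract against this month's to spot drift before it reaches a model.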

The future is bright with ydata-profiling

With the scale boost brought by the integration with other data structures such as Spark DataFrames, ydata-profiling broadens the spectrum of use cases and possibilities around the application of data profiling, from exploratory analysis at scale to the validation of continuous streams of data processed within organizations.

Still, the development efforts won’t stop here. We are committed to making the future of data quality even brighter and more flexible, and over the next releases we plan to keep evolving the most loved Python profiling package to handle ever greater data scale and volume.

As always, feedback is welcome! Feel free to push ydata-profiling to the limit with your large-scale use cases and stay up to date with the latest developments through the project’s roadmap on GitHub.

Happy Profiling!