Synthetic Data for Aligning ML Models to Business Value

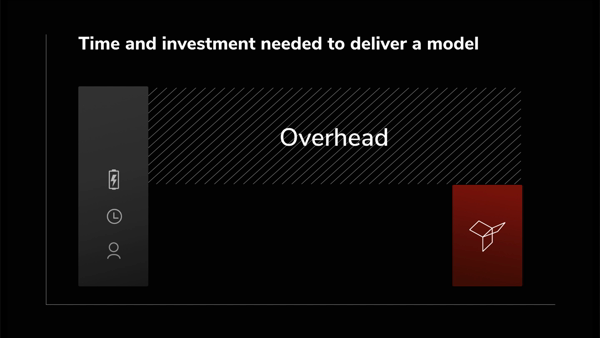

I improved a model to save a hypothetical auto insurance company almost $200 per claim! One of the biggest mistakes junior data scientists make is focusing too much on model performance while remaining naive about the model’s impact...