Cover Photo by John Schnobrich on Unsplash

Data Engineering vs Machine Learning: the differences and overlaps

Data quality is critical to both Data Engineering and Data Science; after all, poor-quality data can be quite costly for a business. According to Gartner, poor data quality is estimated to cost organizations $9.7 million per year.

While the overall goal is to ensure that data is accurate, consistent, and reliable, among other aspects (yes, it can go up to 70 dimensions!!), each field's specific requirements and objectives differ.

This article outlines the key differences between data quality for Data Engineering and data quality for Data Science while dissecting the practices, tooling, and the overall flow of maintaining high-quality data in each domain.

Data Quality for Data Engineering

Data engineering focuses on the collection, storage, processing, and management of data. The primary objective is to ensure that data is readily available, well-structured, and easily accessible for further analysis or processing. Data engineers are responsible for designing and implementing the systems and processes required to acquire, transform, store and deliver the data.

The overall goal? To make data available in a structured and consistent manner so it can be consumed by different downstream applications, such as analytics or even the development of machine learning models.

So what are the top data quality requirements from a data engineering perspective?

- Accuracy: refers to the degree to which data reflects the true values or states of the entities or events it represents. It measures the correctness and precision of the data. As a practical example, suppose the expectation is to collect and process sales transaction data from various sources. To ensure accuracy, data validation checks can be implemented during the data ingestion process to verify that fields contain the expected values, that the data adheres to the defined data types, and that the business rules are met.

- Completeness: all relevant data points should be present, with no missing information that matters to the business. It ensures that the dataset contains the necessary information in its entirety, without gaps or omissions.

- Consistency: refers to the uniformity and coherence of data across different sources, systems or even time periods. It ensures that the data is synchronized, aligned and free from conflicts or discrepancies when integrated. A great example is the process of unifying the organization's data sources and information into a data warehouse. Activities such as data mapping, data transformations (aggregations, for example), integrations and even monitoring are vital to ensure consistency over time.

- Timeliness: refers to the availability and currency of data, ensuring that it is up to date and accessible when needed. Depending on the business, this might mean supporting real-time or near real-time availability for decision-making.
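To make these four dimensions more concrete, here is a minimal sketch of ingestion-time checks for the sales transactions example, using only the Python standard library. The record schema, the allowed currency codes, the 24-hour freshness window and the `check_record` helper are all assumptions made for illustration; a real pipeline would take them from its own data contracts and would likely rely on a dedicated validation tool.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical schema and rules for a sales transaction record (assumptions).
REQUIRED_FIELDS = ("transaction_id", "amount", "currency", "created_at")
ALLOWED_CURRENCIES = {"USD", "EUR", "GBP"}
MAX_AGE = timedelta(hours=24)  # assumed freshness requirement


def check_record(record, now):
    """Return a list of data quality issues found in one record."""
    issues = []

    # Completeness: every required field must be present and non-empty.
    missing = [f for f in REQUIRED_FIELDS if record.get(f) in (None, "")]
    if missing:
        issues.append(f"completeness: missing {', '.join(missing)}")
        return issues  # the remaining checks need these fields

    # Accuracy: values must have the expected type and meet business rules.
    amount = record["amount"]
    if isinstance(amount, bool) or not isinstance(amount, (int, float)):
        issues.append("accuracy: amount is not numeric")
    elif amount <= 0:
        issues.append("accuracy: amount must be positive")

    # Consistency: values must follow the same conventions across sources.
    if record["currency"] not in ALLOWED_CURRENCIES:
        issues.append(f"consistency: unknown currency {record['currency']!r}")

    # Timeliness: the record must be recent enough to be useful.
    if now - record["created_at"] > MAX_AGE:
        issues.append("timeliness: record is older than 24 hours")

    return issues


# Made-up sample records, with a fixed "now" so the result is reproducible.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
records = [
    {"transaction_id": "t1", "amount": 19.99, "currency": "EUR",
     "created_at": datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)},
    {"transaction_id": "t2", "amount": -5, "currency": "eur",
     "created_at": datetime(2023, 12, 30, 9, 0, tzinfo=timezone.utc)},
    {"transaction_id": "t3", "amount": None, "currency": "USD",
     "created_at": datetime(2024, 1, 2, 11, 0, tzinfo=timezone.utc)},
]
report = {r["transaction_id"]: check_record(r, now) for r in records}
for transaction_id, issues in report.items():
    print(transaction_id, issues or "ok")
```

In this sketch the first record passes, the second fails the accuracy, consistency and timeliness checks, and the third is rejected as incomplete. Returning a list of issues per record, rather than raising on the first failure, makes it easier to monitor which dimension degrades over time.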

Different tooling can be used to build and deliver proper data flows to serve different applications and data-driven initiatives. The choice of tools depends on the specific requirements, data volumes and the technology stack within the organization.

Data Quality for Data Science

On the other hand, Data Science, or the development of Machine Learning (ML) solutions, involves training algorithms to learn patterns from the data, make predictions or even optimize processes. High-quality data is essential for ensuring that ML models are able to generalize and produce accurate results. Although the data quality dimensions described for Data Engineering continue to play a role in Data Science, additional requirements must be met to ensure the proper development of the data solution.

So what are the top 5 data quality requirements from a Machine Learning perspective?

- Completeness: refers to the degree to which the dataset contains all the required data, meaning there are no missing or incomplete variables, as these can lead to biased or inaccurate outputs. Missing data can be mitigated with statistical imputation or even synthetic data, depending on the dataset complexity.

- Relevance: the data should be relevant to the problem being solved, containing the necessary information and features for an ML model to learn. Multivariate data profiling, feature selection, dimensionality reduction or even data causality analysis are core to assessing and selecting the most relevant data segment.

- Balance: refers to the expectation of a balanced distribution between classes, to avoid over-representation or under-representation of specific groups. A classical approach to dealing with imbalanced data is augmentation.

- Variability: it is critical that data exhibits sufficient variability to capture the complexity of the problem and prevent over-fitting. This is one of the most challenging aspects of data quality for Machine Learning - how to ensure that enough representative data exists for a certain application? Insufficient data may require approaches such as data augmentation, transfer learning or even leveraging pre-trained models.

- Cleanliness: data should be free from noise, outliers, and inconsistent labels. The presence of noisy data can hinder the learning process. While data engineering can help to detect some types of noise, Machine Learning requires the additional robustness delivered by regularization, outlier detection or even unsupervised ensemble models.
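Some of these requirements can be checked with very little code. The sketch below, using only the Python standard library, illustrates three of them: mean imputation as one simple form of statistical imputation (completeness), a class imbalance ratio (balance), and an interquartile-range rule as a basic stand-in for outlier detection (cleanliness). The helper names, the sample values and the `k=1.5` threshold are assumptions for illustration. Note that the balance helper only measures imbalance; it does not perform augmentation.

```python
from collections import Counter
from statistics import mean, quantiles


def impute_mean(values):
    """Completeness: replace missing values (None) with the observed mean."""
    observed = [v for v in values if v is not None]
    fill = mean(observed)
    return [fill if v is None else v for v in values]


def imbalance_ratio(labels):
    """Balance: size of the largest class divided by the smallest one.

    A ratio of 1.0 means the classes are perfectly balanced.
    """
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


def iqr_outliers(values, k=1.5):
    """Cleanliness: return values outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]


# Made-up sample data.
ages = [34, None, 29, 41, None, 38]
labels = ["ok"] * 8 + ["fraud"] * 2
amounts = [10, 12, 11, 13, 12, 11, 10, 250]

print(impute_mean(ages))        # missing ages filled with the mean, 35.5
print(imbalance_ratio(labels))  # 4.0, "ok" is four times more frequent
print(iqr_outliers(amounts))    # [250]
```

Mean imputation and the IQR rule are deliberately simple choices here: both ignore relationships between variables, which is why more complex datasets tend to call for the multivariate approaches mentioned above.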

It's important to note that the tooling landscape for machine learning data quality is continuously evolving, and new tools and technologies emerge regularly. The choice of tools should be based on the specific data quality challenges, the technology stack, and the requirements of the machine learning project.

The data lifecycle and the modern data teams

Modern data teams are cross-functional groups that collaborate to manage and derive value from data. Although in this article we have focused on comparing data engineers with data scientists, truth be told, the modern stack and organizational needs are leading to the reinvention of other roles, such as data analysts.

Every role has different needs and responsibilities when it comes to data quality. And although they do overlap, they act at different stages of the process of extracting value from data.

- Data analysts perform data analysis, generate reports, and provide insights to stakeholders.

- Data engineers focus on data infrastructure, data integration, and data pipeline development.

- Data scientists leverage statistical and machine learning techniques to extract insights and build predictive models.

Collaboration between these roles is crucial for the success of data projects, with shared responsibilities for data governance, quality assurance, and ensuring the data meets the needs of the organization.

Conclusion

While both data engineering and machine learning rely on high-quality data, their specific requirements and objectives differ. By understanding these differences and implementing best practices, organizations can ensure they have the right infrastructure and tooling for the development of data-driven solutions while ensuring collaboration for better data quality. This will result in better insights, more accurate predictions and improved decision making.

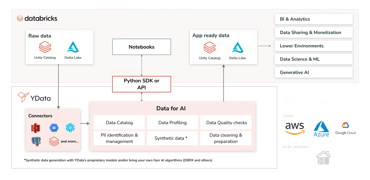

YData built an ecosystem of tooling so data scientists can ensure high-quality data for their projects. Fabric is the first Data-Centric AI workbench with automated data quality profiling and synthetic data generation. Join the growing community of data scientists who trust YData Fabric to accelerate their machine learning projects. Try YData Fabric today, and experience less time spent on manual profiling and improved productivity.