Photo by Roman Synkevych on Unsplash

A probabilistic approach to fast synthetic data generation with ydata-synthetic

To find synthetic data generation within the same sentence as Gaussian Mixture Models (GMMs) sounds odd, but it makes a lot of sense!

To better understand why, let's first deep-dive into the nature of Gaussian Mixture and Probabilistic models and how they can be used not only to generate data but to be able to do it fast and without the need for computational infrastructure, much like we would need to train a Generative Adversarial Net (GANs) or a Variational Autoencoder.

In this blog post, I'll cover the why and how to generate synthetic data with GMM models by leveraging open-source. Follow me into the world of probabilistic models!

What are probabilistic models?

Probabilistic models are mathematical models that capture uncertainty by incorporating probabilistic principles. They are used to represent and reason about uncertain events or processes. In a nutshell, probabilistic models can explain events and/or processes by modeling them through probability distribution and the likelihood of a specific event occurring.

These probabilistic models have many advantages and excellent characteristics hence why they are so relevant for statistical analysis of even Machine Learning solutions. One of the main reasons that make them so popular is that they provide a natural protection against one of the most feared problems in ML, over fitting, which makes them quite robust even in the presence of complex forms of data.

Probabilistic models are divided into two main types:

-

Generative: Generative models describe the joint probability distribution over both observed and unobserved variables. They can be used to generate new samples similar to the original data (where have I heard this before?). Examples of generative models include Gaussian Mixture Models (GMMs), Hidden Markov Models (HMMs), and of course, Generative Adversarial Networks (GANs).

-

Discriminative: Discriminative models focus on modeling the conditional probability distribution of the observed variables given the input or context. We know them very well, as we leverage their capabilities for classification or regression tasks. Examples of discriminative models include logistic regression, support vector machines (SVMs), and deep neural networks (DNNs).

Now that we understand that Gaussian Mixture models are Generative Probabilistic models, without further ado, let's understand the pros and cons of this type of model and how we can leverage them to generate synthetic data.

A deep-dive into Gaussian Mixture models

Gaussian Mixture Models (GMMs) represent a dataset as a mixture of Gaussian distributions. In other words, GMMs assume that any observed data set is a combination of several Gaussian distributions.

Consisting of two main components (mixture components and mixing weights) are the individual Gaussian distributions that build the model, while the mixing weights determine the contribution of each element to the overall distribution.

GMMs aim is to estimate the parameters that describe the mixture components and mixing weights from the given data. This estimation is typically performed using an algorithm called Expectation-Maximization (EM). The EM algorithm iteratively updates the parameters to maximize the likelihood of the observed data given the model.

Once we have a trained GMM, it can be used for various tasks, from clustering to data generation (yes!). Because of their generative nature, GMMs can generate synthetic data by sampling from the learned distribution. By randomly selecting a component according to the mixing weights and sampling from the corresponding Gaussian distribution, synthetic data points are drawn from the learned distribution, ensuring that the new data mimics the characteristics of the original data.

GMMs are flexible, but not everything is rosy - assumptions! Yes, behind the concept of GMMs, there is the assumption that a mixture of Gaussians can well represent the underlying data, which is not the case for many real-world datasets.

GMMs compared to other Generative models

So you are probably wondering - How do they compare to other models such as Generative Adversarial Nets (GANs)?

Truth be told, although GMMs and GANs share the fact that both are generative models, the similarities end there. These models employ different approaches when it comes to learning and generating events.

GANs are not probabilistic: they are deep learning models with two main components (a Generator and a Discriminator). They are trained through an adversarial learning process where the generator and discriminator compete and improve iteratively. New data is generated by inputting random noise into the generator, introducing interesting variability to the synthetic data.

On the other hand, and as we have seen previously, GMMs assume that the data follows a Gaussian distribution and generate new data points from a combination of several Gaussian Components, each characterized by its mean and covariance.

But though both types of models are great, they also come with some challenges:

- GMMs are fast, easy to train, and don't require specific computational acceleration through GPUs. Nevertheless, the quality of the generated data depends a lot on the correct validation and adequacy of the model to learn the complexity of the original data.

- GANs are known for generating high-quality data but also for being data savvy and suffering from issues like mode collapse. Their training demands computing power, and using GPUs is highly recommended.

In summary, the choice between GMMs and GANs depends on the specific requirements of the synthetic data generation task, downstream applications, as well as the quality and characteristics of the underlying data distribution.

There is no one size fits all. It depends!

Get started with ydata-synthetic new Generative model based on GMMs

With version 1.1.0 from the ydata-synthetic package, we can find a new generative model that delivers a reliable yet fast generation of synthetic data without needing GPUs. Bear in mind to always double-check your original data assumptions!

Quickstart by installing ydata-synthetic in a pyenv or conda environment with the following snippet:

Install ydata-synthetic package

Let’s deep drive on how we can generate synthetic data with Gaussian Mixture models. First let’s import the classes and objects that we need:

Import needed objects and functions from ydata-synthetic

The adult census dataset can be automatically downloaded as part of the example by fetching the data with the method fetch_data from the pmlb package.

It is essential always to define which columns are numerical and which are categorical so the solution runs the proper data processing for the selected model. GMMs do not support categorical data by default, so some preprocessing is required. Also, we need to ensure that the data is as close to a Gaussian distribution as possible.

Setting numerical and categorical columns

Training the synthesizer is as simple as follows in the code snippet below - a RegularSynthesizer is instantiated with a modelname=’fast'.

Creating and training the synthesizer

Embedded into the GMM based synthesizer is an algorithm based on the Silhouette score to select the optimal number of components given a Synthesizer.

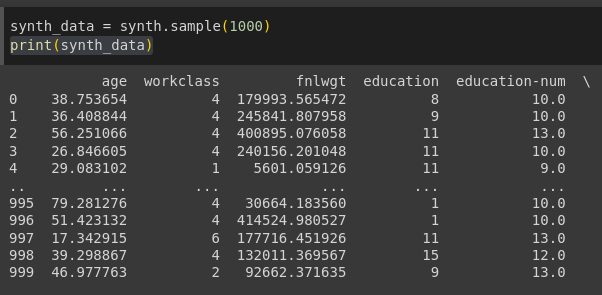

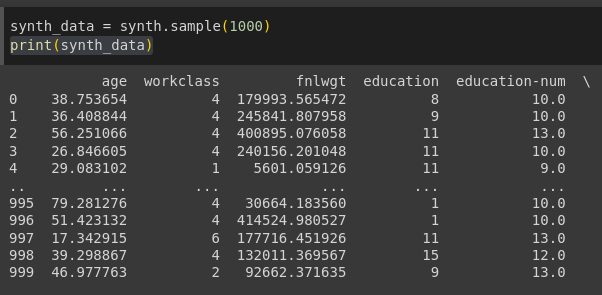

With the training completed, we can sample as many rows as possible based on the real-data learned distribution.

Generating of synthetic data sample with 1000 rows

And finally, we have successfully generated our first synthetic database while using GMMs!

Synthetic data sample screenshot

Synthetic data sample screenshot

You can find the complete code to run this example in this Google Colab!

Conclusion

There is no synthetic data generation model that is one-fits-all - different data have different needs, and different use cases might have additional requirements (e.g. explainability). Gaussian Mixture Models and other Probabilistic Generative models have already shown to be quite flexible and reliable when it comes to synthetic data generation. But, when it comes to scale, variability, and replicating more complex data patterns without being concerned with model assumptions, Deep Learning models like GANs are the way to go!

If you want to learn more about synthetic data, join the Data-Centric AI community, a place to discuss all things about data!

Cover Photo by Roman Synkevych on Unsplash