Data quality plays a crucial role in the success of machine learning projects. In the realm of artificial intelligence, where algorithms learn from data to make predictions and decisions, the quality of the input data directly impacts the accuracy and reliability of the models. In a series of videos and blog posts produced with the Data-Centric AI community, we explore the most common data quality issues and how to overcome them.

In this blog post, we address one of the most fundamental yet often overlooked challenges in machine learning: imbalanced data. This issue can significantly degrade the performance and reliability of your models, which makes it essential for any ML enthusiast or professional to understand.

Imbalanced data

Imbalanced data refers to a situation where the classes or categories in a dataset are not equally represented, with one class overshadowing the others. It's more common than you may think, as we encounter it in various real-world scenarios like credit card fraud detection, rare disease diagnosis, and anomaly detection in manufacturing.

The impact of imbalanced data on machine learning algorithms is profound. Algorithms learn best from well-represented concepts, so they tend to focus on the majority class and neglect the underrepresented ones. This leads to poor performance on the minority class, which is often the class of greatest interest (a fraudulent transaction, a rare disease), even when the model's overall accuracy looks high. Understanding the causes of imbalanced data is therefore crucial for developing performant and reliable machine learning solutions!

The technical content in this post, inspired by the accompanying video, is aimed at anyone looking to improve the accuracy of their ML models, since imbalanced data is common across many domains.

What causes imbalanced data?

Imbalanced data occurs when the distribution of classes within a dataset is unequal; in other words, one class has significantly more samples than the others. This disproportion can arise for various reasons, including:

- Inherent nature of the problem: Some problems have a naturally skewed class distribution, such as fraud detection or rare disease diagnosis.

- Data collection bias: Biases in data collection processes can lead to an over-representation of one class and under-representation of the others.
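
Whatever the cause, a first practical step is to measure how skewed the classes actually are. The sketch below counts samples per class and computes an imbalance ratio, taken here as the majority-class count divided by the minority-class count (one common convention, assumed for illustration). The `class_distribution` helper and the fraud labels are hypothetical.

```python
from collections import Counter

def class_distribution(labels):
    """Return per-class counts, per-class proportions, and the imbalance ratio.

    The imbalance ratio is assumed to be majority count / minority count,
    so 1.0 means perfectly balanced and larger values mean more skew.
    """
    counts = Counter(labels)
    total = sum(counts.values())
    proportions = {cls: n / total for cls, n in counts.items()}
    ratio = max(counts.values()) / min(counts.values())
    return counts, proportions, ratio

# Hypothetical fraud-detection labels: 0 = legitimate, 1 = fraud
labels = [0] * 990 + [1] * 10
counts, proportions, ratio = class_distribution(labels)
print(counts)       # Counter({0: 990, 1: 10})
print(proportions)  # {0: 0.99, 1: 0.01}
print(ratio)        # 99.0
```

A ratio of 99 means there are 99 legitimate transactions for every fraudulent one, which is the kind of skew the strategies below are designed to address.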

Strategies for handling imbalanced data

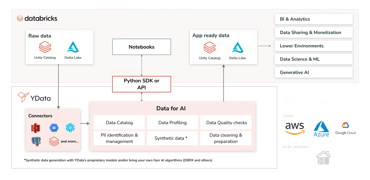

There are several techniques to deal with imbalanced data, including under-sampling, over-sampling (by replication or by synthetic data generation), and hybrid approaches that combine the two. Let's take a closer look at each:

- Under-sampling: This technique involves reducing the number of samples from the majority class to balance the class distribution. While it can improve the model's performance on the minority class, under-sampling might lead to loss of valuable information and reduced overall accuracy.

- Over-sampling: Over-sampling involves increasing the number of samples from the minority class to balance the class distribution. This can be achieved by replicating existing minority samples, by interpolating new ones with methods like SMOTE (Synthetic Minority Over-sampling Technique), or by generating synthetic data with generative models such as GANs (Generative Adversarial Networks) and VAEs (Variational Auto-Encoders).

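- Hybrid approaches: These combine under-sampling and over-sampling to get the benefits of both, for example by over-sampling the minority class and then under-sampling the majority class.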
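
Random under-sampling and random over-sampling by replication can be sketched in a few lines of plain Python. This minimal version assumes features `X` and labels `y` are parallel lists; `random_undersample`, `random_oversample` and the toy data are hypothetical, not a library API. Under-sampling draws each class down to the size of the smallest class without replacement, while over-sampling replicates randomly chosen samples (with replacement) up to the size of the largest class.

```python
import random
from collections import Counter

def _group_by_class(X, y):
    """Map each class label to the list of its feature vectors."""
    groups = {}
    for xi, yi in zip(X, y):
        groups.setdefault(yi, []).append(xi)
    return groups

def random_undersample(X, y, seed=0):
    """Drop random samples so every class shrinks to the size of the smallest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_min = min(len(s) for s in groups.values())
    X_out, y_out = [], []
    for cls, samples in groups.items():
        kept = rng.sample(samples, n_min)  # without replacement: no duplicates
        X_out.extend(kept)
        y_out.extend([cls] * n_min)
    return X_out, y_out

def random_oversample(X, y, seed=0):
    """Replicate random samples so every class grows to the size of the largest class."""
    rng = random.Random(seed)
    groups = _group_by_class(X, y)
    n_max = max(len(s) for s in groups.values())
    X_out, y_out = [], []
    for cls, samples in groups.items():
        extra = rng.choices(samples, k=n_max - len(samples))  # with replacement
        X_out.extend(samples + extra)
        y_out.extend([cls] * n_max)
    return X_out, y_out

# Hypothetical toy data: 90 majority samples (class 0), 10 minority samples (class 1)
X = [[float(i)] for i in range(100)]
y = [0] * 90 + [1] * 10

X_under, y_under = random_undersample(X, y)
X_over, y_over = random_oversample(X, y)
print(Counter(y_under))  # Counter({0: 10, 1: 10})
print(Counter(y_over))   # Counter({0: 90, 1: 90})
```

The output is grouped by class, so shuffle it before training. Resample only the training split, never the validation or test data, so that evaluation reflects the real class distribution. In practice, the imbalanced-learn library provides `RandomUnderSampler` and `RandomOverSampler` for the same purpose.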

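SMOTE creates new minority samples by interpolating between a minority sample and one of its nearest minority-class neighbours, instead of copying existing points. The following is a simplified NumPy sketch for continuous features; the `smote` function, its parameters and the toy points are hypothetical, and it picks base samples at random rather than iterating over every minority sample as the original formulation does.

```python
import numpy as np

def smote(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic samples from minority-class samples X_min (SMOTE-style).

    For each synthetic point: pick a random minority sample, pick one of its k
    nearest minority-class neighbours, and interpolate at a random position on
    the segment between them.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)  # cannot have more neighbours than other minority samples
    # Brute-force pairwise Euclidean distances between minority samples
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]  # k nearest neighbours per sample
    base = rng.integers(0, n, size=n_new)  # random base samples
    picks = neighbours[base, rng.integers(0, k, size=n_new)]  # one random neighbour each
    gap = rng.random((n_new, 1))  # interpolation factor in [0, 1)
    return X_min[base] + gap * (X_min[picks] - X_min[base])

# Hypothetical minority-class points with two features
X_min = np.array([[1.0, 1.0], [1.5, 2.0], [2.0, 1.5], [2.5, 2.5], [3.0, 2.0]])
synthetic = smote(X_min, n_new=10, k=3)
print(synthetic.shape)  # (10, 2)
```

Because every synthetic point lies on a segment between two real minority samples, SMOTE fills in the minority region rather than duplicating points. For real projects, imbalanced-learn's `SMOTE` class implements this technique, and its `SMOTETomek` and `SMOTEENN` classes are examples of the hybrid approach, pairing SMOTE over-sampling with a cleaning under-sampling step.
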
It is also important to choose the right metrics to assess the impact of your dataset balancing decisions. A classifier's accuracy is the percentage of correct predictions out of all predictions made. While it works well for balanced data, it falls short for imbalanced data: a model that always predicts the majority class can achieve high accuracy without ever identifying the minority class. Precision measures how accurate the classifier is when it predicts a specific class (the share of its predictions for that class that are correct), and recall measures its ability to find that class (the share of actual members of that class it identifies). When working with imbalanced datasets, the F1 score, the harmonic mean of precision and recall, is an example of a more suitable and robust evaluation metric.
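
To see why accuracy misleads on imbalanced data, the sketch below computes accuracy, precision, recall and F1 by hand for a hypothetical test set with 95 negatives and 5 positives. Precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean; returning 0.0 when a denominator is zero is a convention assumed here. The `binary_metrics` helper and both sets of predictions are illustrative; scikit-learn's `precision_score`, `recall_score` and `f1_score` in `sklearn.metrics` compute the same quantities.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for the given positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # true positives
    fp = sum(t != positive and p == positive for t, p in pairs)  # false positives
    fn = sum(t == positive and p != positive for t, p in pairs)  # false negatives
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predicted positives that are real
    recall = tp / (tp + fn) if tp + fn else 0.0     # share of real positives that are found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return accuracy, precision, recall, f1

# Hypothetical test set: 95 negatives (0) and 5 positives (1)
y_true = [0] * 95 + [1] * 5

always_majority = [0] * 100             # predicts the majority class for every sample
catches_most = [0] * 93 + [1] * 6 + [0]  # 2 false positives, 4 true positives, 1 miss

print(binary_metrics(y_true, always_majority))  # (0.95, 0.0, 0.0, 0.0)
print([round(m, 3) for m in binary_metrics(y_true, catches_most)])  # [0.97, 0.667, 0.8, 0.727]
```

The classifier that always predicts the majority class reaches 95% accuracy yet never finds a single positive; precision, recall and F1 expose this immediately, while the second classifier gains only two points of accuracy but a far better F1.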

Conclusion

Dealing with imbalanced data is crucial for ensuring accurate and reliable machine learning models. By understanding the causes, challenges, and strategies for handling imbalanced data, you can improve your models' performance and extract valuable insights from your data. We encourage you to explore these strategies in your own projects and join the Data-Centric AI Discord server to share your experiences and learn from others in the community. Happy learning!